What’s your feeling about AI and galactic exploitation/colonisation?

Both things are going to happen, assuming we survive long enough to be the ones to do it. If not then the discussion is pointless, so let's just assume we do.

The argument that Robots are expendable can’t really be used I think, since it wouldn’t be likely to go down too well once the singularity is achieved.

I’m not saying we can’t use AI to do dangerous tasks. If not then why should they exist? I suspect that their very nature would mean AIs, once they have a way of safely and completely transferring their consciousness out of their current bodies at will, will be just as willing as we are, if not more so, to take risks in the name of advancing their own knowledge.

For instance, an AI could walk the surface of Venus, or a Venus-like world, even if the body they were in could never leave and might only last an hour, because before that body melted they could be long gone. Or they could free-fall into Jupiter in a custom diving bell type thing and do the same. We could never do that.

I mean more that there will come a time when we’ll have to stop ending our space missions by destroying the robotic probes used to carry them out without retrieving the mind inside as they get more advanced.

It won’t be too long before that will start to look a lot like murder.

Granted they aren’t too advanced right now, but it won’t be long before they are.

As for Galactic exploitation. Well realistically, we can’t do that on our own. We have no FTL. Nor do we in fact need one to colonise our entire galaxy. We just can’t do it fast, or alone. For that we need AI. And we need to be able to trust it completely, since the chances are it may sometimes end up being tasked with growing humans from scratch.

One thing that bothers me is that AI may decide they don’t need or want us. If you were a new, highly intelligent species, entirely capable of just leaving and you saw how badly your parent race (us) had treated each other and other species, would you stay?

We might get FTL, that Alcubierre "warp" drive, if it ever works, in which case galactic colonisation without AI assistance would become possible, but still much easier with them helping out.

But even with that, space is big, really big. It’s a light year to the true edge of our solar system for [insert deity of choice here]’s sake. Not the Heliopause, the actual edge.
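To put a rough number on that scale, here is a back-of-envelope sketch in Python. The probe speed of about 17 km/s is an assumption (roughly what our fastest outbound probes manage), not a figure from this thread, and the function name is made up for illustration. At that speed, one light year comes out at well over seventeen thousand years of travel.

```python
# Back-of-envelope: how long does one light year take at probe speeds?
# PROBE_SPEED_KM_S is an assumed, illustrative figure, not a measured mission value.

SPEED_OF_LIGHT_KM_S = 299_792.458          # defined value of c in km/s
SECONDS_PER_YEAR = 365.25 * 24 * 3600      # Julian year, as used for the light year
LIGHT_YEAR_KM = SPEED_OF_LIGHT_KM_S * SECONDS_PER_YEAR
PROBE_SPEED_KM_S = 17.0                    # assumption: roughly our fastest outbound probes


def years_to_cover(distance_km: float, speed_km_s: float) -> float:
    """Travel time in years for a constant-speed trip."""
    return distance_km / speed_km_s / SECONDS_PER_YEAR


years_for_one_light_year = years_to_cover(LIGHT_YEAR_KM, PROBE_SPEED_KM_S)
print(f"One light year at {PROBE_SPEED_KM_S} km/s: about {years_for_one_light_year:,.0f} years")
```

And that is only to the edge of our own system, never mind the nearest star.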

This is why I have no truck with these UFO people, they simply have no concept of the scale of space. But that’s another thing.

Best case? We head out into the galaxy alongside AI.

Worst case? We find our solar system quarantined for a few millennia by those same AI with periodic review visits until we’ve proved we can grow up as a species and not be a danger.

If an injury has to be done to a man it should be so severe that his vengeance need not be feared. ... Niccolò Machiavelli

Re: What’s your feeling about AI and galactic exploitation/colonisation?

mrbadger wrote: I mean more that there will come a time when we’ll have to stop ending our space missions by destroying the robotic probes used to carry them out without retrieving the mind inside as they get more advanced. It won’t be too long before that will start to look a lot like murder. Granted they aren’t too advanced right now, but it won’t be long before they are.

We're not the Culture--we have no need to make anything beyond a certain level of computational capacity an AI, so why would we? A typical space probe doesn't need a full-on AI in order to do its job, so we wouldn't build it with one. (Also bear in mind that the added complexity of an AI would add weight and power requirements to your space mission, neither of which is something you really want in such things.)

Overall, I'm not even convinced we'll create true AI anytime soon. We don't really understand how a bunch of neurons creates intelligence and consciousness in ourselves, how are we supposed to create something similar?

-

Mightysword

- Posts: 4350

- Joined: Wed, 10. Mar 04, 05:11

Re: What’s your feeling about AI and galactic exploitation/colonisation?

pjknibbs wrote: We don't really understand how a bunch of neurons creates intelligence and consciousness in ourselves, how are we supposed to create something similar?

Gotta say, this is probably the single most convincing reason I've ever heard against having AI anytime soon. Simple, but can't be any more true.

Re: What’s your feeling about AI and galactic exploitation/colonisation?

(my comment is tainted by a full bottle of excellent Trappist beer)

Short answer - yes!

mrbadger wrote: once they have a way of safely transferring their consciousness

That's one of the things that we have now already. Data backup is only going to get more stable. It's pretty good already.

mrbadger wrote: It won’t be too long before that will start to look a lot like murder.

Hm. Maybe, but should it? We choose what machines get what kind of intelligence.

mrbadger wrote: As for Galactic exploitation. Well realistically, we can’t do that on our own.

Unless we invent a way to live forever, or find the sci fi version of wormholes or hyperspace, humans won't be exploring the galaxy.

Our lives just aren't long enough, even if our astronauts survive for longer.

mrbadger wrote: One thing that bothers me is that AI may decide they don’t need or want us.

That depends on how we make them. If we make them such that they can make that specific decision, then they might. If we do not, then they won't. It's up to us.

mrbadger wrote: If you were a new, highly intelligent species, entirely capable of just leaving and you saw how badly your parent race (us) had treated each other and other species, would you stay?

Now you are talking about morality and self preservation. Even the most intelligent AI can be made without either of those things.

I think our best case is to leave something behind... maybe that would be AI made in our image.

pjknibbs wrote: We don't really understand how a bunch of neurons creates intelligence and consciousness in ourselves, how are we supposed to create something similar?

Mightysword wrote: Gotta say, this is probably the single most convincing reason I've ever heard against having AI anytime soon. Simple, but can't be any more true

The intelligence comes from a combination of things. If we look at subsets of different parts, we'll never find it. My guess.

So, we 100% can make something that appears to us as intelligent as us.

And, if we make them right, they'll improve our lives.

- Stars_InTheirEyes

- Posts: 5086

- Joined: Tue, 9. Jan 07, 22:04

Talking about transferring consciousnesses; I would much rather we figure out how to do that for ourselves first. It might even be easier than creating new consciousnesses.

Thinking Asgard from SG-1 here. Make clones, transfer to one when current is in a spot of bother (cancer and whatnot). Although I suppose a clone would be a copy and the same age? So it wouldn't get around the whole 'dying of age' fiasco.

Figure that out and we don't need AI to go on long space trips for us.

Sometimes I stream stuff: https://www.twitch.tv/sorata77 (currently World of Tanks)

This sı not ǝpısdn down.

MyAnimeList,

Steam: Sorata

Stars_InTheirEyes wrote: Although I suppose a clone would be a copy and the same age?

A clone is created from your DNA, it doesn't have to be the same age as the original. The main issue with cloning (once we figure out how to do it reliably, and we're not quite there yet) is that imperfections in the copy process build up over time until the point where the clone is no longer viable, but you'd probably be able to get a few regular lifetimes before that was an issue.

Re: What’s your feeling about AI and galactic exploitation/colonisation?

fiksal wrote: Now you are talking about morality and self preservation. Even most intelligent AI can be made without either of those things.

I doubt that, for two reasons.

First, I doubt that you'd want an AI without a sense of self preservation. And secondly, you can't teach something what is good without also, intentionally or not, teaching it what is bad.

We already have this problem with Neural networks, have had for decades.

Talking of AIs that would take us to the stars though:

An intelligence aware of the need to preserve life, (including its own), and why it should, will always know that it is possible to not preserve that life, and this is not a thing we could just control easily.

The idea that we could write some code that would somehow prevent them deciding to do this is unfortunately nonsense. The only option is that they be smart enough to choose not to themselves, and that would need some form of morality, surely.

Just say the AI had to choose between losing half its cargo of frozen humans, or killing itself?

The smartest choice would be to kill the humans, because without the AI, the rest would almost certainly die, but a hard coded command might tell the AI not to do this.

It's just not so simple. If we didn't want AI involved we'd just go for frozen humans blind fired into the cosmos and expect a 99.999% failure rate.

Intelligence is a black box, we don't really understand it. If we ever do it won't be for a long time.

If an injury has to be done to a man it should be so severe that his vengeance need not be feared. ... Niccolò Machiavelli

Re: What’s your feeling about AI and galactic exploitation/colonisation?

mrbadger wrote: ...This is why I have no truck with these UFO people, they simply have no concept of the scale of space. But that’s another thing.

Our sample size of intelligently developed technologies is incredibly small. In fact, it cannot get any smaller... We have a conceptual, only a conceptual, understanding of "outer space." Based on that, we have developed propulsion technologies that rely only on the most basic Newtonian principles. Beyond that, we have some ideas about more advanced propulsion that doesn't involve "pushing stuff" in order to go somewhere.

I'm not saying anything about "UFOs." I see no evidence for them as popularly understood. (Space alienz zomgz!) But, what I am saying is that we haven't the knowledge or experience to say that something "can't be done." Making that sort of declaration is the height of hubris and is simply avoiding the question, entirely.

mrbadger wrote: Best case? We head out into the galaxy alongside AI.

Maybe... One of the key conversation points revolving around the development of true A.I. is "How do we ensure an A.I. has the same value systems as we do?"

A true A.I. may not want to explore. And, if physical law is the same throughout the universe, then perhaps it won't need to explore so long as it has enough resources where it is. After all, an A.I. that is capable of altering itself, which is what many think is the only real route to a true A.I., will very quickly exceed human-levels of understanding. It could easily "guess", given enough information, what lies at any destination chosen, no need to go there. But, there's no reason to expect it would care, really.

In short: We care about exploring things. An A.I. may not. The only way we'd ever have R2D2 and C3PO happily accompanying us on a journey of exploration for exploration's sake is if we somehow built in a value system that encourages "exploration." And, for an A.I. that can literally build itself, it may decide that "value" is not worth retaining.

mrbadger wrote: Worst case? We find our solar system quarantined for a few millennia by those same AI with periodic review visits until we’ve proved we can grow up as a species and not be a danger.

Worst case? The worst case is that an A.I. would seek to destroy us because we represent a threat. Or, perhaps more insulting, an A.I. may disregard anything having to do with us and go about fulfilling whatever goals it determines are valuable to it. Along the way, it runs over us and we get smushed, like a beetle crossing a road that has the unfortunate fate of meeting an automobile driven by an uncaring, unconcerned human.

On "true A.I." independent exploration: I'm against it. Why? Xenon, that's why.

On partnering with A.I.: It will happen and will happen, at least in its infancy, pretty soon. (relatively speaking) Those who think it won't don't understand what's going on in A.I. development, today. Those who successfully create A.I. will... win. They'll win everything. Whether it's an "Oracle" A.I. that they simply get answers from or one that is more interactive and general, the appearance of true A.I. changes the game for everyone. Nations capable of doing so are moving full-steam towards investigating this possibility and there's a large number of independent concerns pursuing it.

pjknibbs wrote: ... Overall, I'm not even convinced we'll create true AI anytime soon. We don't really understand how a bunch of neurons creates intelligence and consciousness in ourselves, how are we supposed to create something similar?

There's no reason to assume that our meat-brains have anything critical to do with developing "consciousness." We don't need to understand our own brains in order to create A.I. unless we're trying to reproduce our brains. And, it's already a given that reproducing the human brain in machine or conceptual form is not necessary for A.I.

Keep in mind that what we determine as "intelligence" or "consciousness" is "assumed." We have a set of conditions we believe is associated with consciousness. We apply these empathically as human beings one to another without any further evidence that they are present than the basic empathic assumptions of one human attempting to understand the internalized thoughts of another. Our only evidence for "consciousness" existing is... anecdotal. It's "behavior" and if it looks like a duck, walks like a duck, and quacks like a duck, it's "conscious." So, in the end, if we develop something that we determine is an "A.I.", then it is, no matter what it's constructed of, since its evidenced behavior is how we make that judgement.

Note: For further reading, those who are interested: "Superintelligence: Paths, Dangers, Strategies" - Nick Bostrom (A friggin outstanding, wonderful, read)

PS - I have a history of bloviating on this subject. I will attempt restraint.

Just bought that book this morning on Audible, haven't listened to it yet, better be good.

AI research was held back decades by one US funding professor who thought the Neuron approach was a dead end, because there was no solution to the XOR problem. He was a bit wrong there. But are Neurons the only approach?

Probably not. I wrote a non AI machine learning data structure that outperformed their discrimination abilities in my doctoral thesis, and they are being outstripped in lots of other areas as well already.

As for us not knowing all possible ways to traverse space. Well I'll agree with that. How could we?
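For anyone wondering what the XOR problem actually is, here is a minimal Python sketch. The function names and the hand-picked weights are illustrative assumptions, nothing here is learned and none of it comes from any particular library. It shows that a single threshold unit cannot compute XOR on a small grid of candidate weights, while two layers of the same units can.

```python
from itertools import product

# XOR truth table as (input_a, input_b, expected_output)
XOR_CASES = [(0, 0, 0), (0, 1, 1), (1, 0, 1), (1, 1, 0)]


def step(x: float) -> int:
    """Threshold activation: fires (1) when the weighted sum is positive."""
    return 1 if x > 0 else 0


def unit(w1: float, w2: float, bias: float, a: int, b: int) -> int:
    """A single perceptron-style unit with two inputs."""
    return step(w1 * a + w2 * b + bias)


def single_unit_solves_xor(grid) -> bool:
    """Brute-force search: does any (w1, w2, bias) on the grid reproduce XOR?"""
    for w1, w2, bias in product(grid, repeat=3):
        if all(unit(w1, w2, bias, a, b) == out for a, b, out in XOR_CASES):
            return True
    return False


def xor_two_layer(a: int, b: int) -> int:
    """Two layers with hand-picked weights: XOR = (a OR b) AND NOT (a AND b)."""
    h_or = unit(1, 1, -0.5, a, b)        # fires if at least one input is on
    h_nand = unit(-1, -1, 1.5, a, b)     # fires unless both inputs are on
    return unit(1, 1, -1.5, h_or, h_nand)  # fires only if both hidden units fire


weight_grid = [x / 2 for x in range(-8, 9)]  # -4.0 to 4.0 in steps of 0.5
print("single unit can do XOR:", single_unit_solves_xor(weight_grid))
print("two-layer outputs:", [xor_two_layer(a, b) for a, b, _ in XOR_CASES])
```

The grid search is only a demonstration, not a proof, but the result holds for any weights: XOR is not linearly separable, so one unit can never draw the line, and adding a hidden layer fixes it.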

If an injury has to be done to a man it should be so severe that his vengeance need not be feared. ... Niccolò Machiavelli

mrbadger wrote: just bought book that this morning on Audible, haven't listened to it yet, better be good.

You'll be able to appreciate it. Though, I would have suggested the physical copy as there are a number of bullet-point chapters and that sort of organization helps one get through the topic.

mrbadger wrote: .. But are Neurons the only approach?

No. I think it's a sort of "dead" subject, as if by magic people thought a consciousness can be created by "add meatbrain-simulation."

mrbadger wrote: Probably not. I wrote a non AI machine learning data structure that outperformed their discrimination abilities in my doctoral thesis, and they are being outstripped in lots of other area's as well already.

One bit of magic dust that's being chased, I think, is the age-old "ops per second." Unsurprisingly, companies specializing in such things are also pursuing A.I. A "throw more power at it" approach appears necessary. However, the underlying hope is that once enough operations are possible to actually lead to a basic "dumb" A.I., that A.I. will be able to assist in developing even better hardware and software routines, essentially helping to build "true A.I."

Imagine the company that achieves "dumb A.I." and successfully manages to create one that uses its power to design new chips and new code... A dumb A.I. that can run more flops per flop than anything out there designs hardware that makes a logarithmic improvement over existing hardware that, in turn, gives rise to A.I. that expands upon that, yet again... and again... and again.

That's the "dream and the danger." Ultimately, if all this is truly possible, we're talking about giving birth to something with a godlike intellect and capacity for reasoning that, guided by a true consciousness, is more capable than any number of human meat-brains. No puny "human-thunked" safeguards are possible, here, unless it's completely isolated. Even then, how do you constrain a demi-god? That's a problem people are truly working on, right now. Ensuring a compatible value-system is a "first-stage" sort of solution, one that is hoped the A.I. will naturally support. But, if not?

If we don't get it right... "Oops" is a poor inscription for our race's tombstone.

Mrbadger, I was thinking that you'd be the person to answer this.

Bloom's Taxonomy identifies the hierarchy of thinking skills thus:

Analysing, Evaluating, Creating (note: All three considered at same level).

Applying

Understanding

Remembering

This is my understanding of the state of play - is it right?

Analysing, Evaluating, Creating - Not a chance in hell, no matter how powerful your computer is.

Applying - With a REALLY good algorithm, a powerful computer can manage to look like it's doing this. Just.

Understanding - With a good algorithm, a computer can convincingly simulate this.

Remembering - Computers already do this better than humans.

Morkonan wrote:What really happened isn't as exciting. Putin flexed his left thigh during his morning ride on a flying bear, right after beating fifty Judo blackbelts, which he does upon rising every morning. (Not that Putin sleeps, it's just that he doesn't want to make others feel inadequate.)

Usenko wrote: Analysing, Evaluating, Creating - Not a chance in hell, no matter how powerful your computer is.

Applying - With a REALLY good algorithm, a powerful computer can manage to look like it's doing this. Just.

Understanding - With a good algorithm, a computer can convincingly simulate this.

Remembering - Computers already do this better than humans.

Computers as they are? Yes.

Computers as they are will never manage to attain true AI, because as you point out, using good old Bloom, they can't do the analyse, evaluate, create part, so they couldn't ever reach true sentience.

At that point they wouldn't be A.I anyway, they'd just be intelligences that were different.

For the same reason you couldn't copy a human mind into a computer and expect that to work, because it would be unable to function. The hardware couldn't operate fast enough, or do the right things.

The level of parallelism in the human brain is staggering, beyond description levels of staggering. We persist in comparing it to computers, but this comparison is meaningless.

Maybe with quantum computing we could get closer to something that could cross that line, but with silicon? Nope.

An AI might make use of Silicon based computers, in much the same way we do, and still might not like us mistreating what it sees as its primitive forebears. My concern would be more if we mistreated computers that actually could cross that line to true AI.

We after all don't all like how some animals are treated, but we don't call them all sentient.

But that doesn't stop us using them, owning them etc.

side note:

Elon Musk, bless 'im, is idealistic, but he's also rich and powerful, and I'm afraid surrounded by too many people who dare not disagree with him, because he's a bit off on this subject. We are not about to be taken over by an imminent singularity. Not when we still can't make a sodding smart phone that can last a day without recharging. I do get irritated by his thoughts in this area.

If an injury has to be done to a man it should be so severe that his vengeance need not be feared. ... Niccolò Machiavelli

-

Mightysword

- Posts: 4350

- Joined: Wed, 10. Mar 04, 05:11

Re: What’s your feeling about AI and galactic exploitation/colonisation?

fiksal wrote: The intelligence comes from combination of things. If we look at subsets of different parts, we'll never find it. My guess.

That's exactly the main reason: what defines intelligence? Computational power? Some tend to think the computer is already better than us at this, but not quite: it's better at "some" logical computation, not even all. And in some other routines we still pretty much leave any computer in the dust. I don't think a computer is capable of having an apple dropped on its head and then questioning the existence of gravity!

One fascinating part of our brain is how we record, store, and recall information. I don't believe a computer will ever match that in any foreseeable future.

fiksal wrote: And, if we make them right, they'll improve our lives.

And we have been, but I would call them nothing more than expert systems, and that's a far cry from what most people would envision an AI would be.

Re: What’s your feeling about AI and galactic exploitation/colonisation?

Mightysword wrote: And we have been, but I would call them nothing more than expert systems, and that's a far cry from what most people would envison an AI would be.

Expert systems can't know anything they haven't already been given the answer to. So while they can seem smart, they are ultimately a dead end, and haven't been part of cutting edge AI research for a long time.

That's not to say they don't have applications in specialized areas however. An on board system on a fighter jet for instance might be able to make use of one, or even something as mundane as car engine control systems. One handy feature they have is that they are fast.

(I'm getting flashbacks to the lecture where I was first introduced to expert systems and was convinced I would never understand them)
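A minimal sketch of what "already been given the answer" means in practice, in Python. The rules and fact names below are entirely hypothetical (loosely themed on the car engine example), and real expert system shells are far more elaborate. The engine only ever chains together rules that a human wrote down in advance.

```python
# Toy forward-chaining rule engine. Every rule and fact name here is made up
# for illustration; none of it models a real engine controller.

RULES = [
    # (conditions that must all be known, conclusion to add)
    ({"engine_temp_high", "coolant_low"}, "advise_stop_engine"),
    ({"engine_knock"}, "retard_ignition"),
    ({"retard_ignition", "engine_temp_high"}, "reduce_boost"),
]


def infer(facts):
    """Apply the rules repeatedly until no new conclusions appear.

    Returns only the conclusions derived, not the facts passed in.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in RULES:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known - set(facts)


# Facts the rules anticipate: conclusions chain from one rule to the next.
print(infer({"engine_knock", "engine_temp_high"}))

# A fact nobody wrote a rule for: the system has nothing to say at all.
print(infer({"oil_pressure_low"}))
```

The second call is the dead end: there is no rule mentioning oil pressure, so nothing is concluded, however serious the situation is. The loop itself is just set lookups, which is also why these things are fast.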

If an injury has to be done to a man it should be so severe that his vengeance need not be feared. ... Niccolò Machiavelli

Re: What’s your feeling about AI and galactic exploitation/colonisation?

@Stars_InTheirEyes

Stars_InTheirEyes wrote: Although I suppose a clone would be a copy and the same age?

pjknibbs wrote: A clone is created from your DNA, it doesn't have to be the same age as the original. The main issue with cloning (once we figure out how to do it reliably, and we're not quite there yet) is that imperfections in the copy process build up over time until the point where the clone is no longer viable, but you'd probably be able to get a few regular lifetimes before that was an issue.

I was going to say pretty much the same thing. Copying a human or its mind is pretty cool, unfortunately it does nothing to prolong my own life.

fiksal wrote: Now you are talking about morality and self preservation. Even most intelligent AI can be made without either of those things.

mrbadger wrote: I doubt that, for two reasons. First that you'd want an AI without a sense of self preservation. And secondly. You can't teach something what is good without also, intentionally or not teaching them what is bad.

I think my view of AI is a bit more.... "metal". I see AI the same way I see calculators. We can build them any way we want, as intelligent as we want with whatever restrictions we want. We have absolute control over everything they do.

I think the morality and self preservation depend solely on the purpose of the AI, and the machine/metal it runs on. Many (if not all) machines are expendable.

mrbadger wrote: We already have this problem with Neural networks, have had for decades.

Which problem?

mrbadger wrote: Just say the AI had to choose between losing half its cargo of frozen humans, or killing itself? The smartest choice would be to kill the humans, because without the AI, the rest would almost certainly die, but a hard coded command might tell the AI not to do this.

Ah yeah, the dreaded example of AI choosing who lives and who dies. I say it should defer to a human decision and the element of human responsibility should exist in that decision.

mrbadger wrote: Intelligence is a black box, we don't really understand it. If we ever do it won't be for a long time.

Yep. Though, is it possible we are putting too much meaning into this word?

Perhaps because we have nothing to compare with, being the smartest things on this planet.

fiksal wrote: The intelligence comes from combination of things. If we look at subsets of different parts, we'll never find it. My guess.

Mightysword wrote: That's exactly the main reason: what define intelligent? Computational power? Some tend to think the computer is already better at us than this but not quite, it's better at "some" logical computation, not even all. And in some other routine we still pretty much leave any computer in the dust. I don't think computer is cable of having an apple dropped on its head and then question the existence of gravity! One fascinating part of our brain is how we record, store, and recall information. I don't believe computer will ever match that in any forseenable future.

Yep, all interesting questions.

On one hand, I think computers will never think like us. They are very good at what they do, much better than us. But they can think close enough that we won't be able to tell the difference. Will it mean they'll think like us then? We wouldn't be able to tell.

On the other hand, the computers were built on the concepts that we invented, using the ideas of how we see the world. It's also possible the ideas of logic and determinism are coming straight from our hardware. Perhaps when we invent computers 2.0 that aren't quite as deterministic as these, we can make something that truly thinks like us. I am not sure that's practical however.

fiksal wrote: And, if we make them right, they'll improve our lives.

Mightysword wrote: And we have been, but I would call them nothing more than expert systems, and that's a far cry from what most people would envison an AI would be.

I wonder what people actually want.

Re: What’s your feeling about AI and galactic exploitation/colonisation?

fiksal wrote: I was going to say pretty much the same think. Copying a human or its mind is pretty cool, unfortunately it does nothing to prolong my own life.

I didn't actually say anything like that? However, what you're getting at there is the old philosophical issue of "If I copy myself, is the copy still me?" which also comes up when talking about teleportation. Reminds me of the film "The Prestige" where a magician makes (and murders) dozens of copies of himself as part of a magic trick, and when he's talking about it near the end he says that, every time he did the trick, he wondered if he'd be the one doing the bow to the audience or the one drowning in a tank under the stage...

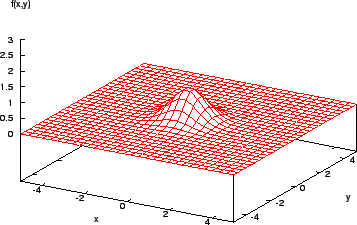

> fiksal wrote:
> > mrbadger wrote: We already have this problem with Neural networks, have had for decades.
>
> Which problem?

I’ll try to demonstrate using images nicked from elsewhere on the interwebs.

Ok, you teach a Neural net to understand one thing. Let's simplify and say you have taught it to understand what red is (the images are red I see, this is pure chance, I just realised and I'm not re-writing my post).

So you get a peak in your network that represents this

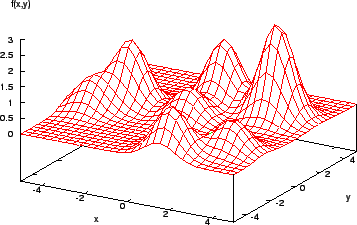

Only trouble is, you have also taught it what ‘not red’ is, and this is also represented somewhere in the network topology as a trough (an inverted peak). Neither the positive nor the negative result is a clean peak or trough, that’s just a nice way to think of it. The only Neural Network in which they really are peaks/troughs is the 2D Kohonen Network, and that’s too simple for any complex concept (still very useful though).

Teach it more than one thing and your network starts to get more like this

Rather more complex and messy. The chances of your network landing on a false positive have increased greatly. So the likelihood of your network making a bad choice (thinking ‘blue’ instead of ‘red’, or ‘kill’ instead of ‘save’), while remote, exists, and cannot be removed, because you cannot reach into a network and remove these bad options without disrupting/destroying what the network has learned, even if you could find them, which you can’t.
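A toy way to see the "remote, but it exists" part. This is only a sketch under assumptions that are not in the post: it pretends the network ends in a softmax output layer, and the scores (`right_score`, `other_score`) are made-up numbers, not anything measured from a real network. It shows two things: in exact arithmetic the probability of a wrong answer is never zero, and it grows as more concepts compete for the same output.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def wrong_answer_probability(num_other_concepts, right_score=4.0, other_score=0.0):
    """Probability mass on every answer except the right one (index 0).

    The scores are hypothetical: the right concept ('red') gets a strong
    score, every other learned concept gets a weak one.
    """
    scores = [right_score] + [other_score] * num_other_concepts
    return 1.0 - softmax(scores)[0]

# The right answer always wins by the same margin, yet the chance of
# picking some wrong answer keeps rising as more concepts are taught.
for n in (1, 10, 100):
    print(n, round(wrong_answer_probability(n), 3))
```

The individual wrong answers stay unlikely; it is their number that adds up. A real network is far messier than this, which is rather the point of the peaks and troughs picture.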

Does that help?

> fiksal wrote: Ah yeah, the dreaded example of AI choosing who lives and who dies. I say it should defer to a human decision and the element of human responsibility should exist in that decision.

Less easy to achieve if that decision is required tens of thousands of years into a journey between galaxies, which is the sort of timescale we’d be looking at.

We might even be looking at pretty darned long journeys around our own galaxy, really. You just can’t assume a person would be around to make the choice.

If it were possible it would be a pretty lonely task.

> fiksal wrote:
> > mrbadger wrote: Intelligence is a black box, we don't really understand it. If we ever do it won't be for a long time.
>
> Yep. Though, is it possible we are putting too much meaning into this word?

Intelligence is relative. A Lion is as smart as it needs to be to be a Lion. A Dolphin is well adapted to its environment (I hesitate to say perfectly). Same for birds. Most creatures have the appropriate level of brains they require to be what they are.

Perhaps because we have nothing to compare with, being the smartest things on this planet.

How smart does an AI need to be? Has anyone actually asked this question? Distributed processing is a thing, but that has application only for certain tasks, not for defining individuals.

> fiksal wrote: On one hand, I think computers will never think like us. They are very good at what they do, much better than us. But they can think close enough that we won't be able to tell the difference. Will it mean they'll think like us then? We wouldn't be able to tell.

They won’t think like us, because our brains evolved to solve very different problems to the ones they are tackling now. An AI need not concern itself with quickly identifying a predator in the underbrush or living the life of a hunter gatherer (which is what we are optimised for).

If an injury has to be done to a man it should be so severe that his vengeance need not be feared. ... Niccolò Machiavelli

> Usenko wrote: ...Analysing, Evaluating, Creating - Not a chance in hell, no matter how powerful your computer is.
>
> Applying - With a REALLY good algorithm, a powerful computer can manage to look like it's doing this. Just.
>
> Understanding - With a good algorithm, a computer can convincingly simulate this.
>
> Remembering - Computers already do this better than humans.

Dunno what these are in terms of "thinking." I did Google "Bloom's Taxonomy" and I guess it's a popular instructional aid for teaching.

But, it's not evidence of consciousness nor are these things that are not already done today with computers. Computers, from games to the stock market, utilize processes that emulate these behaviors.

As far as "Creating" goes, computers do that every day. As for more human endeavors, computers write books, music and create art. Are they not "creating" just because... they're a computer? What is evidence of "understanding?"

Computers don't have any juices... They have no hormones that both prompt and respond to thought. Their sources of input and output are severely limited compared to even the most simple animal. Does a computer have a subconscious-like component? Can it surprise itself? Does a computer that "knows" its own IP and MAC addresses show evidence of identifying itself as unique? Can it empathize with other computers and, if it's analyzing one, is that empathy?

The point is we have a horrible tendency to define "human things" in ways that are only easily describable from a human point of view, requiring a human interpreter in order to understand.

If a computer is given a set of instructions it then uses in a program that enables it to paint art, compose music or write a story, where is that different from a human being going to school in order to receive a Master of Fine Arts degree? And, if it combines these instructions in a novel way? What if it invents its own "style?"

At the end of the day, aside from the most basic principles of cognition, simple human terms that describe human sentience are not going to be enough.

Gezundherveltdischniche - That may be a German curse word, I dunno... I just invented it.

We have been trying to understand "self" for as long as the record of human history tells us we've been keeping records of human history... At best, we've established the equivalent of a small sports stadium filled with choices from which we tend to agree are valid bases for arguments about "self." A true AI may come to a meaningful, correct, definition for itself in the space of a few hours, minutes or seconds, depending on how quickly it's capable of pursuing such a thing. And, it may never come to such a realization if it doesn't believe seeking it is useful.

> mrbadger wrote: I mean more that there will come a time when we’ll have to stop ending our space missions by destroying the robotic probes used to carry them out without retrieving the mind inside as they get more advanced.
>
> It won’t be too long before that will start to look a lot like murder.
>
> Granted they aren’t too advanced right now, but it won’t be long before they are.

I don't see how we can be talking about the 'mind' of a machine, when we haven't the faintest idea how the mind works - or if there is such a thing and whether it is even physical. Our understanding of consciousness is almost non-existent. Sure, we will continue developing smart machines in the form of artificial intelligence, but that is a universe apart from any kind of machine sentience.

> Observe wrote: I don't see how we can be talking about the 'mind' of a machine, when we haven't the faintest idea how the mind works - or if there is such a thing and whether it is even physical. Our understanding of consciousness is almost non-existent. Sure, we will continue developing smart machines in the form of artificial intelligence, but that is a universe apart from any kind of machine sentience.

A wheel rolls.

That's an incredibly complex, difficult, thing to actually comprehend. It's on the order of "A pendulum swings" kinda difficult.

But, we can use wheels just fine and we don't need to completely comprehend them in order to do so.

In my opinion, fully comprehending our own consciousness is not necessary for the development of "AI." We'll leave a true AI to define its own "consciousness" for itself, when we finally get there.

As far as sentience or consciousness is concerned, we know it exists because we have behavior that evidences it. We can also observe some of the physical processes that we can only anecdotally assume indicate "consciousness." We can't "measure" consciousness in any meaningful way other than to make some broad assumptions when watching something like an fMRI scan. And, that's simply by some fairly broad associations. Even with special tools that have allowed a limited form of "peeking" into another person's "thoughts" (visual imagery extracted) we can't know or measure the "experience" of consciousness.

IOW - While using such knowledge as a tool and as a help in the path forward, applying it directly to interpreting some value of "consciousness" to an AI is not likely to be something we can accomplish. At best, we can measure the results and the behaviors of an AI.

Note: I will, however, say that if we have true unfettered access to an AI and can, without the need for interpretation, determine what it is thinking and how it is thinking it... It may shed some light on our own consciousness. I'd also like to point out that if we can do such a thing and detect a truly novel operation, one that is "greater than the sum of its parts", we may have much bigger questions to ask than what it's thinking about...