fiksal wrote: I was going to say pretty much the same thing. Copying a human or its mind is pretty cool; unfortunately it does nothing to prolong my own life.

I didn't actually say anything like that? However, what you're getting at there is the old philosophical issue of "If I copy myself, is the copy still me?", which also comes up when talking about teleportation. Reminds me of the film "The Prestige", where a magician makes (and murders) dozens of copies of himself as part of a magic trick, and when he's talking about it near the end he says that, every time he did the trick, he wondered if he'd be the one taking the bow in front of the audience or the one drowning in a tank under the stage...

What’s your feeling about AI and galactic exploitation/colonisation?

Re: What’s your feeling about AI and galactic exploitation/colonisation?

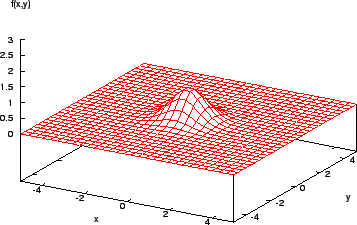

mrbadger wrote: We already have this problem with Neural networks, have had for decades.

fiksal wrote: Which problem?

I’ll try to demonstrate using images nicked from elsewhere on the interwebs.

OK, you teach a neural net to understand one thing. Let's simplify and say you have taught it to understand what red is (the images are red, I see; this is pure chance, I just realised, and I'm not rewriting my post).

So you get a peak in your network that represents this

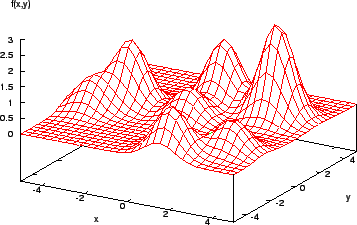

Only trouble is, you have also taught it what ‘not red’ is, and this is also represented somewhere in the network topology as a trough (an inverted peak). Neither the positive nor the negative result is really a clean peak or trough; that’s just a nice way to think of it. The only neural network in which they really are peaks/troughs is the 2D Kohonen network (self-organising map), and that’s too simple for any complex concept (still very useful though).
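For anyone who hasn't met one, a Kohonen self-organising map fits in a few lines. This is a minimal sketch, not anything from the post: the grid size, learning-rate and neighbourhood schedules, and the RGB colour inputs are all arbitrary choices. Each grid node holds a weight vector; for every input, the best-matching node is pulled towards it and its grid neighbours are pulled less strongly, which is what carves out the "peak" for a concept.

```python
import math
import random


def best_matching_unit(weights, x):
    """Grid position of the node whose weight vector is closest to x."""
    return min(weights, key=lambda n: sum((w - v) ** 2 for w, v in zip(weights[n], x)))


def train_som(samples, rows=4, cols=4, steps=200, seed=0):
    """Train a tiny 2D Kohonen self-organising map (illustrative sketch).

    samples: list of equal-length tuples, e.g. RGB colours in [0, 1].
    Returns a dict mapping (row, col) grid positions to weight vectors.
    """
    rng = random.Random(seed)
    dim = len(samples[0])
    # Every node starts with random weights.
    weights = {(r, c): [rng.random() for _ in range(dim)]
               for r in range(rows) for c in range(cols)}
    radius0 = max(rows, cols) / 2.0
    for t in range(steps):
        frac = t / steps
        lr = 0.5 * (1.0 - frac) + 0.01          # learning rate decays from 0.51 towards 0.01
        radius = radius0 * (1.0 - frac) + 0.5   # neighbourhood shrinks as training goes on
        x = samples[t % len(samples)]
        bmu = best_matching_unit(weights, x)
        for node, w in weights.items():
            # Gaussian neighbourhood on the grid: the best-matching node gets the
            # full update, nearby nodes a smaller one, distant nodes almost none.
            grid_d2 = (node[0] - bmu[0]) ** 2 + (node[1] - bmu[1]) ** 2
            influence = math.exp(-grid_d2 / (2.0 * radius ** 2))
            for i in range(dim):
                w[i] += lr * influence * (x[i] - w[i])
    return weights


reds_and_blues = [(1.0, 0.0, 0.0), (0.9, 0.1, 0.1), (0.0, 0.0, 1.0), (0.1, 0.1, 0.9)]
som = train_som(reds_and_blues)
print("red lands on node", best_matching_unit(som, (0.95, 0.05, 0.0)))
print("blue lands on node", best_matching_unit(som, (0.0, 0.05, 0.95)))
```

With more than one concept, nodes sitting between the two clusters typically end up with in-between weights, which is one concrete way to picture the messiness that creeps in once the network has learned several things.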

Teach it more than one thing and your network starts to get more like this

Rather more complex and messy. The chance of your network landing on a false positive has increased greatly. So the likelihood of your network making a bad choice (thinking ‘blue’ instead of ‘red’, or ‘kill’ instead of ‘save’), while remote, exists and cannot be removed: you cannot reach into a network and remove these bad options without disrupting or destroying what the network has learned, even if you could find them, which you can’t.
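The "remote but irremovable" bad choice has a textbook analogue that doesn't need a neural network at all (this is a swapped-in illustration, not the example from the post): when two concepts overlap, even the best possible classifier has a non-zero error rate, known as the Bayes error. A minimal sketch, assuming two one-dimensional Gaussian "concepts" with the same spread and equal prior probability:

```python
import math


def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def bayes_error(separation, sigma=1.0):
    """Lowest achievable error rate when telling apart two equally likely
    Gaussian classes N(-separation/2, sigma^2) and N(+separation/2, sigma^2).

    The optimal boundary sits half-way between the means, and each class
    spills over it with probability Phi(-separation / (2 * sigma)).
    """
    return normal_cdf(-separation / (2.0 * sigma))


for sep in (0.5, 2.0, 4.0, 8.0):
    print(f"separation {sep}: best possible error rate {bayes_error(sep):.6f}")
```

The error shrinks as the two concepts move apart but never reaches zero, which is the point above in miniature.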

Does that help?

fiksal wrote: Ah yeah, the dreaded example of AI choosing who lives and who dies. I say it should defer to a human decision, and the element of human responsibility should exist in that decision.

Less easy to achieve if that decision is required tens of thousands of years into a journey between galaxies, which is the sort of timescale we’d be looking at.

We might even be looking at that on pretty darned long journeys around our own galaxy, really. You just can’t assume a person would be around to make the choice.

If it were possible it would be a pretty lonely task.

mrbadger wrote: Intelligence is a black box, we don't really understand it. If we ever do, it won't be for a long time.

fiksal wrote: Yep. Though, is it possible we are putting too much meaning into this word? Perhaps because we have nothing to compare with, being the smartest things on this planet.

Intelligence is relative. A lion is as smart as it needs to be to be a lion, a dolphin is well adapted to its environment (I hesitate to say perfectly), same for birds. Most creatures have the appropriate level of brains they require to be what they are.

How smart does an AI need to be? Has anyone actually asked this question? Distributed processing is a thing, but that has application only for certain tasks, not for defining individuals.
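The limit on distributed processing mentioned here is usually formalised as Amdahl's law (a standard result brought in as an illustration, not something from the post): if only a fraction p of a task can be spread across n processors, the serial remainder caps the speed-up at 1/(1 - p), however many processors you add.

```python
def amdahl_speedup(parallel_fraction, processors):
    """Amdahl's law: speed-up of a task whose parallel_fraction can be split
    across `processors` workers while the rest must run serially."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("need at least one processor")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / processors)


# Even with 95% of the work parallelisable, the speed-up never exceeds 20x.
for n in (1, 10, 1000, 1_000_000):
    print(n, round(amdahl_speedup(0.95, n), 2))
```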

fiksal wrote: On one hand, I think computers will never think like us. They are very good at what they do, much better than us. But they can think close enough that we won't be able to tell the difference. Will it mean they'll think like us then? We wouldn't be able to tell.

They won’t think like us, because our brains evolved to solve very different problems from the ones they are tackling now. An AI need not concern itself with quickly identifying a predator in the underbrush or living the life of a hunter-gatherer (which is what we are optimised for).

If an injury has to be done to a man it should be so severe that his vengeance need not be feared. ... Niccolò Machiavelli

Usenko wrote: ...Analysing, Evaluating, Creating - Not a chance in hell, no matter how powerful your computer is.

Applying - With a REALLY good algorithm, a powerful computer can manage to look like it's doing this. Just.

Understanding - With a good algorithm, a computer can convincingly simulate this.

Remembering - Computers already do this better than humans.

Dunno what these are in terms of "thinking." I did Google "Bloom's Taxonomy", and I guess it's a popular instructional aid for teaching.

But none of it is evidence of consciousness, and these are all things computers already do today. Computers, from games to the stock market, use processes that emulate these behaviors.

As far as "Creating" goes, computers do that every day. As for more human endeavors, computers write books, compose music and create art. Are they not "creating" just because... they're a computer? What is evidence of "understanding?"

Computers don't have any juices... They have no hormones that both prompt and respond to thought. Their sources of input and output are severely limited compared to even the simplest animal. Does a computer have a subconscious-like component? Can it surprise itself? Does a computer that "knows" its own IP and MAC addresses show evidence of identifying itself as unique? Can it empathize with other computers and, if it's analyzing one, is that empathy?

The point is we have a horrible tendency to define "human things" in ways that are only easily describable from a human point of view, requiring a human interpreter in order to understand.

If a computer is given a set of instructions it then uses in a program that enables it to paint art, compose music or write a story, how is that different from a human being going to school in order to receive a Master of Fine Arts degree? And what if it combines these instructions in a novel way? What if it invents its own "style?"

At the end of the day, aside from the most basic principles of cognition, simple human terms that describe human sentience are not going to be enough.

Gezundherveltdischniche - That may be a German curse word, I dunno... I just invented it.

We have been trying to understand "self" for as long as the record of human history tells us we've been keeping records of human history... At best, we've established the equivalent of a small sports stadium filled with choices that we tend to agree are valid bases for arguments about "self." A true AI may come to a meaningful, correct definition for itself in the space of a few hours, minutes or seconds, depending on how quickly it's capable of pursuing such a thing. And it may never come to such a realization if it doesn't believe seeking it is useful.

Re: What’s your feeling about AI and galactic exploitation/colonisation?

mrbadger wrote: I mean more that, as they get more advanced, there will come a time when we’ll have to stop ending our space missions by destroying the robotic probes used to carry them out without retrieving the mind inside.

It won’t be too long before that will start to look a lot like murder.

Granted they aren’t too advanced right now, but it won’t be long before they are.

I don't see how we can be talking about the 'mind' of a machine when we haven't the faintest idea how the mind works - or if there is such a thing and whether it is even physical. Our understanding of consciousness is almost non-existent. Sure, we will continue developing smart machines in the form of artificial intelligence, but that is a universe apart from any kind of machine sentience.

Re: What’s your feeling about AI and galactic exploitation/colonisation?

Observe wrote: I don't see how we can be talking about the 'mind' of a machine, when we haven't the faintest idea how the mind works - or if there is such a thing and whether it is even physical. Our understanding of consciousness is almost non-existent. Sure, we will continue developing smart machines in the form of artificial intelligence, but that is a universe apart from any kind of machine sentience.

A wheel rolls.

That's an incredibly complex, difficult thing to actually comprehend. It's on the order of "A pendulum swings" kinda difficult.

But, we can use wheels just fine and we don't need to completely comprehend them in order to do so.

In my opinion, fully comprehending our own consciousness is not necessary for the development of "AI." We'll leave a true AI to define its own "consciousness" for itself, when we finally get there.

As far as sentience or consciousness is concerned, we know it exists because we have behavior that evidences it. We can also observe some of the physical processes that we can only anecdotally assume indicate "consciousness." We can't "measure" consciousness in any meaningful way, other than to make some broad assumptions when watching something like an fMRI, and even that rests on fairly broad associations. Even with special tools that have allowed a limited form of "peeking" into another person's "thoughts" (visual imagery extracted), we can't know or measure the "experience" of consciousness.

IOW - While such knowledge is useful as a tool and as a help on the path forward, applying it directly to assign some value of "consciousness" to an AI is not likely to be something we can accomplish. At best, we can measure the results and the behaviors of an AI.

Note: I will, however, say that if we have true unfettered access to an AI and can, without the need for interpretation, determine what it is thinking and how it is thinking it... It may shed some light on our own consciousness. I'd also like to point out that if we can do such a thing and detect a truly novel operation, one that is "greater than the sum of its parts", we may have much bigger questions to ask than what it's thinking about...

Re: What’s your feeling about AI and galactic exploitation/colonisation?

fiksal wrote: I was going to say pretty much the same thing. Copying a human or its mind is pretty cool; unfortunately it does nothing to prolong my own life.

pjknibbs wrote: I didn't actually say anything like that? However, what you're getting at there is the old philosophical issue of "If I copy myself, is the copy still me?", which also comes up when talking about teleportation. Reminds me of the film "The Prestige", where a magician makes (and murders) dozens of copies of himself as part of a magic trick, and when he's talking about it near the end he says that, every time he did the trick, he wondered if he'd be the one taking the bow in front of the audience or the one drowning in a tank under the stage...

I've started with your thought and taken a few leaps forward.

mrbadger wrote: I’ll try to demonstrate using images nicked from elsewhere on the interwebs.

It does help.

So a neural network is made to identify something that you taught it, but may make a greater mistake about things that you didn't teach it about? Especially when the concepts cross over?

I see what you mean about teaching good vs bad then.

fiksal wrote: Ah yeah, the dreaded example of AI choosing who lives and who dies. I say it should defer to a human decision, and the element of human responsibility should exist in that decision.

mrbadger wrote: Less easy to achieve if that decision is required tens of thousands of years into a journey between galaxies, which is the sort of timescale we’d be looking at.

We might even be looking at that on pretty darned long journeys around our own galaxy, really. You just can’t assume a person would be around to make the choice.

If it were possible it would be a pretty lonely task.

Best, then, to work out these possible decisions in testing, to make sure the AI will behave predictably. That way, if a group of people is going to be flushed into space, that group understands the risks (that they might be the ones flushed).

mrbadger wrote: Intelligence is relative. A lion is as smart as it needs to be to be a lion, a dolphin is well adapted to its environment (I hesitate to say perfectly), same for birds. Most creatures have the appropriate level of brains they require to be what they are.

How smart does an AI need to be? Has anyone actually asked this question? Distributed processing is a thing, but that has application only for certain tasks, not for defining individuals.

Oh I agree. Maybe we shouldn't concentrate on making AI human-like, and should just make them the best machines and calculators.

fiksal wrote: On one hand, I think computers will never think like us. They are very good at what they do, much better than us. But they can think close enough that we won't be able to tell the difference. Will it mean they'll think like us then? We wouldn't be able to tell.

mrbadger wrote: They won’t think like us, because our brains evolved to solve very different problems from the ones they are tackling now. An AI need not concern itself with quickly identifying a predator in the underbrush or living the life of a hunter-gatherer (which is what we are optimised for).

We have evolved, though, to be good problem solvers. At the very least, I'd like AI to get there too.

Last edited by fiksal on Tue, 7. Nov 17, 00:01, edited 1 time in total.

Re: What’s your feeling about AI and galactic exploitation/colonisation?

fiksal wrote: So a neural network is made to identify something that you taught it, but may make a greater mistake about things that you didn't teach it about? Especially when the concepts cross over?

I see what you mean about teaching good

So that final bit of discrimination, where sometimes even our minds fail (psychopathy and other things we group under the blanket term insanity, which we are nowhere near fixing in ourselves, or understanding), is the bit where we say 'yes, I know how to do the wrong thing here, but I won't, because I choose not to'. It requires a bit of creativity and independence of thought.

Without that ability to choose, non-blindly, not to, you would create an automatic psychopath in waiting that had no idea it was a psychopath.

So it might select what was, to it, a perfectly reasonable option, but to us that choice might be monstrous (plenty of humans have made such choices).
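The "perfectly reasonable to it, monstrous to us" failure is what happens when an optimiser is handed an objective that leaves out something we care about. A toy sketch with entirely hypothetical options and scores: the unconstrained chooser simply takes the highest score, while the constrained one first discards options that break a hard rule, a crude machine stand-in for "I know how to do the wrong thing here, but I won't". The rule still has to be written down by someone, which is exactly the hard part.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    score: float        # what the machine was told to maximise (hypothetical)
    harms_crew: bool    # something the score forgot to account for


def choose(options, forbid=None):
    """Pick the highest-scoring option, skipping any the `forbid` rule rejects."""
    allowed = [o for o in options if forbid is None or not forbid(o)]
    if not allowed:
        return None  # refusing to act beats picking a forbidden option
    return max(allowed, key=lambda o: o.score)


options = [
    Option("ration supplies", score=0.70, harms_crew=False),
    Option("vent half the crew to save air", score=0.95, harms_crew=True),
    Option("divert to nearer star", score=0.60, harms_crew=False),
]

print(choose(options).name)                                 # vent half the crew to save air
print(choose(options, forbid=lambda o: o.harms_crew).name)  # ration supplies
```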

This is often explored in SF and, for the story's sake, easily fixed, defeated, or just McGuffined around. In reality it wouldn't be so easy to fix.

If we insisted on human like AI that is.

If instead we allowed a different form of AI that maybe we didn't completely understand, it might be these issues wouldn't be there.

They are, it seems, mostly the result of meat-wiring issues.

I might be discoursing from my rear on this last aspect, however.

In an effort to make a post that's "On-Topic:"

What are people's opinions on one of the proposed means for traveling long distances -

Given the literally astronomically long travel times we believe are inevitable, what are your thoughts concerning humans being either "constructed" from DNA patterns or "incubated" from stored eggs, with the resulting offspring being taught by AI, all once the vessel has reached a suitable destination?

(Some science-fiction books have attempted to explore such a scenario. One that comes to mind, though not completely echoing this strategy, is "Anvil of Stars" by Greg Bear. Incidentally, it and its precursor, "The Forge of God", have both been optioned for movies, and a screenplay is in the works. If you've not read them, you need to - they're friggin' awesome books by one of the best of "The Killer Bees.")

I see no reason why colonising humans couldn't just be recreated from digitally stored DNA.

It would be a lot less prone to injury/mutation by cosmic rays for a start. The longer the journey, the greater the chance of damage to physical DNA, however stored, to the point that the likelihood of damage approaches unity, and you are left only with how severe that damage will be.
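The "approaches unity" point follows if damaging hits are treated as independent random events arriving at a constant average rate. That model (a Poisson process) is an assumption, and the rate below is a made-up placeholder, not a measured figure. Under it, the chance of at least one hit in time t is 1 - e^(-rate * t), which creeps towards 1 as t grows.

```python
import math


def p_any_damage(hits_per_year, years):
    """Probability of at least one damaging event, assuming events arrive as a
    Poisson process with a constant average rate (hits_per_year)."""
    return 1.0 - math.exp(-hits_per_year * years)


RATE = 1e-4  # hypothetical: one damaging hit per 10,000 years on average
for years in (100, 10_000, 100_000, 1_000_000):
    print(f"{years:>9} years: P(at least one hit) = {p_any_damage(RATE, years):.4f}")
```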

There might be some theological arguments against it, but a pattern is just that, a pattern. We can already record, store and recreate DNA.

A human created from digitally stored DNA would be identical in every respect to one created naturally. Except possibly less prone to being produced from damaged source DNA.
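As a sketch of why a digital pattern is easier to protect than a physical molecule: each base can be packed into two bits, several copies stored, and a corrupted copy out-voted on read-back. The 2-bit mapping and three-copy majority vote are illustrative choices only (a real archive would use proper error-correcting codes), not a description of any actual colony-ship design.

```python
from collections import Counter

BASE_TO_BITS = {"A": 0b00, "C": 0b01, "G": 0b10, "T": 0b11}
BITS_TO_BASE = {bits: base for base, bits in BASE_TO_BITS.items()}


def encode(seq):
    """Turn a DNA string into a list of 2-bit codes, one per base."""
    return [BASE_TO_BITS[base] for base in seq]


def decode(codes):
    """Turn a list of 2-bit codes back into a DNA string."""
    return "".join(BITS_TO_BASE[code] for code in codes)


def majority_vote(copies):
    """Rebuild each position from the value most stored copies agree on.

    With three copies, a single corrupted copy at any position is out-voted.
    If all copies disagree there is no majority, and the first copy's value wins.
    """
    return [Counter(values).most_common(1)[0][0] for values in zip(*copies)]


original = "GATTACA"
copies = [encode(original) for _ in range(3)]
copies[1][2] = BASE_TO_BITS["C"]   # simulated radiation damage in one copy
copies[2][5] = BASE_TO_BITS["G"]   # and a different hit in another copy
print(decode(majority_vote(copies)))  # GATTACA
```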

- BigBANGtheory

- Posts: 3168

- Joined: Sun, 23. Oct 05, 12:13

mrbadger wrote: ...A human created from digitally stored DNA would be identical in every respect to one created naturally. Except possibly less prone to being produced from damaged source DNA.

But, are they... "human"? What about our culture, values, our social arrangements, etc? Can an "AI" teach those? At least, an AI we can imagine that isn't drawing too heavily on "magic" to make it work in such a theorycraft discussion.

As far as species survival goes, I don't really care about that stuff and would be fine with knowing that, at least, we're continuing our species in some way. But, if those humans eventually developed into evil, nasty dark lords that murdered every space-puppy they could find? I probably wouldn't be very enthusiastic about contributing to that. And most people are hesitant to sacrifice our "heritage and culture" in such a way.

So, in the end... What do we tell the AI to teach these new humans? Someone has to make a list of things they should be taught and who is that going to be? Who chooses what bits of our culture survive and what doesn't?

BigBANGtheory wrote: AI will fill 30% of the workforce in the next 20yrs, that's great if you are a consumer or in tech but not so great for transaction and back office workers.

Unskilled labor always has problems finding lucrative employment.

But, one of the assumed benefits of "freeing up the workforce" is that efficiency will be so great that costs will come down and production will rise, making most commodities and basic products so easily available that people will have a great deal more time for pursuing things other than basic subsistence priorities.

That's one of the arguments in favor of this sort of progress. Is it a real one? I don't know; we're dealing with something that's a complete "unknown."

Until we develop very low maintenance, or even self-maintaining, labor-worker type robots, there will always be a need for someone to go somewhere and turn a screw or dig a ditch. So, the unskilled labor market will still exist in some form. And, new, low-skill, jobs may even become available. If the panacea of AI and robotic labor truly comes into being, we may even be looking at an economic boom that changes society for the better.

Or.... not.

Last edited by Morkonan on Tue, 7. Nov 17, 23:31, edited 1 time in total.

mrbadger wrote: ...A human created from digitally stored DNA would be identical in every respect to one created naturally. Except possibly less prone to being produced from damaged source DNA.

Morkonan wrote: But, are they... "human"? What about our culture, values, our social arrangements, etc? Can an "AI" teach those? At least, an AI we can imagine that isn't drawing too heavily on "magic" to make it work in such a theorycraft discussion.

Where would that information come from? Would the source be any different if they were naturally born?

And of what relevance would it be?

You'd need to rely on the computers to educate generation one, whatever they were taught, but I think at first it would be how to science. Not much fun really.

Yes, a lot of that Earth culture and background would matter; you do need to learn from the past. But what would the detailed history of a world or culture light years distant matter to new settlers?

To their descendants I could understand, but not to the first few generations. Gradual introduction of the knowledge would occur I'd imagine, since there'd be no reason to censor it, and humans are curious creatures.

mrbadger wrote: Where would that information come from? Would the source be any different if they were naturally born?

And of what relevance would it be?

For them, not much relevance perhaps. But for us, those who have to make sacrifices in order to make such missions possible?

Convincing people that sacrificing human culture and heritage in order to perpetuate the species... Well, people aren't very concerned with things that don't happen in their back yards and that they may never actually experience. Group Altruism... That's a very difficult thing. We'd have to convince people of the rewards of a higher principle.

mrbadger wrote: Yes, a lot of that Earth culture and background would matter; you do need to learn from the past. But what would the detailed history of a world or culture light years distant matter to new settlers?

And, intentionally or not, you've stumbled upon a common science-fiction trope: Why does Earth culture matter to us on Planet X? Our social and cultural ideals are superior to those, anyway...

mrbadger wrote: To their descendants I could understand, but not to the first few generations. Gradual introduction of the knowledge would occur, I'd imagine, since there'd be no reason to censor it, and humans are curious creatures.

I think we're relatively hard-wired in some ways, especially our social imperatives. That doesn't mean those will be abandoned. But there are some things we've adopted that aren't necessarily "hard-wired"; we've just come to value them. One thing that could be done is to set up certain social plans that we know, from our own experience, "work" to produce a society with the best advantages we can plan for. After that, they're on their own.

And you've mentioned two more books I've bought this month on Audible and got loaded to listen to next.

I've wanted them for a while, but two credits a month means I need to prioritize. The three extra credits thing is a very occasional treat.

To the history thing. You'd have to think of the priorities of the settlers.

If you slowed their colony's development by insisting each generation spent time and effort learning about their homeworld?

Well that idea might motivate the people working to send off the colony ship to start with, but might also create resentment in the colony itself over time, particularly if it was perceived as having caused delays (and if those delays cost anyone's lives).

You could send all the information, send a computer specialised in teaching history, bias the rest of your education towards 'this would be more valuable if you knew the stuff in the history computer', and let the rest happen naturally, couldn't you?

This is all speculative. They might see the history computer as a handy source of spare parts. Or their normal education computer in the same way.

mrbadger wrote: And you've mentioned two more books I've bought this month on Audible and got loaded to listen to next.

I've wanted them for a while, but two credits a month means I need to prioritize. The three extra credits thing is a very occasional treat.

Money spent on a good book is never wasted.

mrbadger wrote: To the history thing. You'd have to think of the priorities of the settlers.

You're gonna like the Herot books...

mrbadger wrote: If you slowed their colony's development by insisting each generation spent time and effort learning about their homeworld?

You're really gonna like the Herot series...

mrbadger wrote: Well that idea might motivate the people working to send off the colony ship to start with, but might also create resentment in the colony itself over time, particularly if it was perceived as having caused delays (and if those delays cost anyone's lives).

That's a good point. Not just about the delays, but "blaming the people of home for the problems we have encountered here."

People are "hatched" and grow up on a new world, stewarded by a benevolent AI. They grow up and eventually start having babies. Earth finally receives a transmission from the new colony, generations after it left: "YOU BARTARDS! WHY DIDN'T YOU INCLUDE DIAPER TECHNOLOGY! POOP EVERYWHERE! WHEN WILL IT EVER END? WE DIDN'T KNOW! WE DIDN'T KNOW!" <First and final transmission from Colony Alpha, 2187>

mrbadger wrote: You could send all the information, send a computer specialised in teaching history, bias the rest of your education towards 'this would be more valuable if you knew the stuff in the history computer', and let the rest happen naturally, couldn't you?

How much do you leave up to chance for what represents an attempt at continuation of the species? Evolution is X2 sagt Bussi auf Bauch, filled with spite and loves nothing. Currently, and only relatively speaking, we've got this strange idea of "individual freedom" and "human rights." Human rights that list, among other things, freedom of expression. "Expression?" WTF? That's some sort of gosh-darned important idea that most nations of the world have come together to say it's... a priority?

Do we warn them that people who perceive they are being oppressed, whether or not it's factual, have a dangerous tendency to rise against the ruling classes and cut their heads off? Do we tell them that people who feel that they have no way to affect their own lives eventually end up being easy to manipulate into following just about anyone, no matter how cruel and terrible they really are?

Those are "natural" social tendencies, it seems. In the face of them, stability is only maintained by absolute authority. Do we tell them that, too?

Crap... there's a sci-fi book with that theme, too, but the title escapes me atm. It's famous, darnit... Anyway, a man/machine/something shepherds a colony of humans, forms their society and... makes mistakes throughout its history, resulting in "bad things," because in the end, he/it isn't "perfect" and has a non-zero chance of making mistakes, no matter what happens.

mrbadger wrote: This is all speculative. They might see the history computer as a handy source of spare parts. Or their normal education computer in the same way.

Tomorrow is speculative. The next moment is speculative. Traditionally, the error rate increases over time and, at some point, becomes exponential.

But, we've got to get some of these things figured out before the decision-point comes. Your OP is a question we need to have answered before we're faced with having to make a decision.

Morkonan wrote: That's a good point. Not just about the delays, but "blaming the people of home for the problems we have encountered here."

People are "hatched" and grow up on a new world, stewarded by a benevolent AI. They grow up and eventually start having babies. Earth finally receives a transmission from the new colony, generations after it left: "YOU BARTARDS! WHY DIDN'T YOU INCLUDE DIAPER TECHNOLOGY! POOP EVERYWHERE! WHEN WILL IT EVER END? WE DIDN'T KNOW! WE DIDN'T KNOW!" <First and final transmission from Colony Alpha, 2187>

Dear Offspring:

Whilst we appreciate the depth of your feelings, we feel constrained to point out that "Diaper" was included in the manual, under "D", for Diaper, page 4. It was also included under the alternative name "Nappy", under "N", page 23. A stock of the appropriate articles was included in your colonisation equipment, along with a synthesising device (which we assume that you must have dismantled and cannibalised into a whiskey still or something).

In short,

READ YOUR MANUAL.

<First and final reply from Earth Colony Command, 2188>

Morkonan wrote:What really happened isn't as exciting. Putin flexed his left thigh during his morning ride on a flying bear, right after beating fifty Judo blackbelts, which he does upon rising every morning. (Not that Putin sleeps, it's just that he doesn't want to make others feel inadequate.)

Morkonan wrote: You're really gonna like the Herot series...

I get it! I'm gonna listen to them next.

I had no idea I'd be as engrossed as I am. I initially got them because I thought it was pretty much required reading, but now I want more.

This idea of what to teach the nascent colony is interesting. How could you know exactly what to teach?

The job of a teacher involves more than just spouting information like a tap, you need to make choices about what information, or what level of each type is required.

Re: What’s your feeling about AI and galactic exploitation/colonisation?

mrbadger wrote: We might get FTL, that Alcubierre "warp" drive, if it ever works, in which case galactic colonisation without AI assistance would become possible, but still much easier with them helping out.

We don't need FTL; just L, or very close to it, would easily be good enough. In fact, if you could get close enough to c that the time dilation became REALLY significant, you mightn't even need a generation ship.
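To put numbers on "close enough to c": for a ship coasting at a constant speed v (ignoring the acceleration and braking phases, a big simplification), the crew's elapsed time is the Earth-frame travel time divided by the Lorentz factor γ = 1/√(1 − v²/c²). The 100-light-year distance and the speeds below are illustrative choices, not figures from the thread.

```python
import math


def lorentz_factor(beta):
    """gamma for a speed given as a fraction of c (0 <= beta < 1)."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must be in [0, 1)")
    return 1.0 / math.sqrt(1.0 - beta * beta)


def trip_times(distance_ly, beta):
    """(Earth-frame years, shipboard years) for a constant-speed cruise."""
    if not 0.0 < beta < 1.0:
        raise ValueError("beta must be in (0, 1)")
    earth_years = distance_ly / beta   # light-years / (fraction of c) = years
    ship_years = earth_years / lorentz_factor(beta)
    return earth_years, ship_years


for beta in (0.1, 0.6, 0.99, 0.9999):
    earth, ship = trip_times(100.0, beta)
    print(f"v = {beta}c: {earth:,.1f} years on Earth, {ship:,.1f} years aboard")
```

At 0.6c, for example, γ is exactly 1.25, so a 60-light-year trip takes 100 Earth years but only 80 years aboard; the saving only becomes dramatic very close to c.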

More realistic but still substantial sublight speeds are likely to be good enough to have a decent stab at it. Sure, it would then become a long, slow, laborious process, but hey, we have time. Even at relatively modest sublight velocities you could colonise a respectable portion of the galaxy in, say, 5 million years or so: an absolute sneeze of time in geological terms, let alone galactic ones.
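The "5 million years or so" figure is easy to sanity-check with back-of-envelope arithmetic. Assuming (placeholders, not figures from the post) a galactic disc about 100,000 light-years across, hops of 10 light-years between colonies, ships at 2% of c, and some settling time at each stop before the next ship leaves, the colonisation front's average speed is the hop distance divided by (transit time + settling time):

```python
def front_speed(hop_ly, ship_beta, settle_years):
    """Average speed (fraction of c) of a colonisation wave that advances one
    hop at a time: hop_ly / ship_beta years in transit, then settle_years
    before the new colony launches ships of its own."""
    transit_years = hop_ly / ship_beta   # light-years / (fraction of c) = years
    return hop_ly / (transit_years + settle_years)


def years_to_cross(distance_ly, hop_ly, ship_beta, settle_years):
    return distance_ly / front_speed(hop_ly, ship_beta, settle_years)


GALAXY_LY = 100_000   # assumed, rough diameter of the galactic disc
HOP_LY = 10           # assumed spacing between colonised systems
SHIP_BETA = 0.02      # assumed ship speed: 2% of c

for settle in (0, 400, 4_000):
    years = years_to_cross(GALAXY_LY, HOP_LY, SHIP_BETA, settle)
    print(f"{settle:>5} years settling per hop: about {years / 1e6:.0f} million years")
```

With no pause the crossing takes about 5 million years, in line with the estimate above; even long pauses at every stop only stretch it to tens of millions of years, still small next to the galaxy's multi-billion-year age.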

pjknibbs wrote: Overall, I'm not even convinced we'll create true AI anytime soon. We don't really understand how a bunch of neurons creates intelligence and consciousness in ourselves, so how are we supposed to create something similar?

Probably in much the same way evolution did... largely by accident.

In fact, I'm pretty sure that iterative models based upon evolutionary principles are one of the major ways AI is being pursued, their main advantage being that you don't really need to have much idea HOW to do what you're aiming for: just pick what you feel is a logical starting state, apply selection pressure and allow mutation... slow, though.
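The "starting state, selection pressure, mutation" recipe is essentially an evolutionary (genetic) algorithm. A minimal sketch on a toy problem, where the target phrase, population size and mutation rate are arbitrary choices for illustration: nothing in the code knows how to spell the target; it only scores candidates and breeds from the better ones.

```python
import random
import string

TARGET = "a wheel rolls"                 # arbitrary toy goal
ALPHABET = string.ascii_lowercase + " "


def fitness(candidate):
    """Count of characters that already match the target (higher is better)."""
    return sum(c == t for c, t in zip(candidate, TARGET))


def mutate(candidate, rate, rng):
    """Replace each character with a random one with probability `rate`."""
    return "".join(rng.choice(ALPHABET) if rng.random() < rate else c
                   for c in candidate)


def evolve(pop_size=100, rate=0.05, max_generations=5000, seed=1):
    """Return (best candidate found, generations used)."""
    rng = random.Random(seed)
    # Starting state: random strings of the right length.
    population = ["".join(rng.choice(ALPHABET) for _ in TARGET)
                  for _ in range(pop_size)]
    for generation in range(max_generations):
        population.sort(key=fitness, reverse=True)
        best = population[0]
        if best == TARGET:
            return best, generation
        # Selection pressure: only the top fifth get to reproduce.
        parents = population[: pop_size // 5]
        # Keep the current best unchanged (elitism) so progress is never lost,
        # and fill the rest of the next generation with mutated offspring.
        population = [best] + [mutate(rng.choice(parents), rate, rng)
                               for _ in range(pop_size - 1)]
    return max(population, key=fitness), max_generations


result, generations = evolve()
print(f"reached {result!r} after {generations} generations")
```

As the post says, it's slow: it burns thousands of random guesses on something a person could type directly, but it needs no idea of how to get there.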

"Shoot for the Moon. If you miss, you'll end up co-orbiting the Sun alongside Earth, living out your days alone in the void within sight of the lush, welcoming home you left behind." - XKCD

Morkonan wrote: You're really gonna like the Herot series...

mrbadger wrote: I get it! I'm gonna listen to them next, right after I finish listening to a lecture series on Machiavelli (Machiavelli in Context).

"Machiavelli in Context?"

A friend of mine has read "The Prince" and constantly tries to build his empire. I've tried to say, nicely, that he needs a bit of perspective on "The Prince."

Machiavelli, like Sun Tzu, wanted a friggin' job... Preferably, a job with a nice fat patron involved.

mrbadger wrote: This idea of what to teach the nascent colony is interesting. How could you know exactly what to teach?

The job of a teacher involves more than just spouting information like a tap; you need to make choices about what information, or what level of each type, is required.

"Teach a man to fish..."

In other words, you teach them skills they can use to gain knowledge of whatever they encounter as well as some common measures to take to ensure survival so far from a suitable environment, then you rely heavily on their own intelligence to make sense of it all, given they are armed with the tools you've given them.

I don't remember any "How to learn stuffs" classes in college. We had "orientation" classes like "Here's the library, where you will hate going" and "Here's the activity center and cafeteria, where everyone goes, eventually, to hide from their profs."

Teaching someone how to learn, how to investigate, giving them examples of previous knowledge, examples of how certain natural systems work, etc., and how to use or make the tools necessary to investigate novel encounters, and doing so safely, is the best we could hope to do.

PS - There are tons of "brave new colonists" books out there. And, there are just as many "after the fall" books, where colonies degenerate into strange practices, usually something of a medieval culture or even one based entirely on some novel thing it encounters on the planet. There are also plenty of "Robinson Crusoe on Mars" types of books, too, which cover basic survival on an unknown alien world and the like. The "Herot" series starts off with a sort of roundup of events a few months/years after the first generation of colonists have been awakened.

Re: What’s your feeling about AI and galactic exploitation/colonisation?

Bishop149 wrote: We don't need FTL; just L, or very close to it, would easily be good enough. In fact, if you could get close enough to c that the time dilation became REALLY significant, you mightn't even need a generation ship.

You say that like it's easy to get arbitrarily close to c, but the energy requirements to do so are colossal, and we have no reasonable way of generating those amounts of power. Even 100% perfect fusion would require most of your ship to be fuel to get anywhere near c.
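The fuel problem can be quantified with the relativistic rocket equation: a rocket whose exhaust leaves at speed v_e reaches speed βc from rest when its initial-to-final mass ratio is exp(artanh(β) · c/v_e). The exhaust speed of 0.1c below is an assumed, optimistic figure for an idealised fusion drive, not a number from the thread, and the sketch counts nothing but propellant.

```python
import math


def mass_ratio(beta, exhaust_beta):
    """Initial/final mass needed to reach beta (fraction of c) from rest,
    for a rocket whose exhaust leaves at exhaust_beta (fraction of c).

    Relativistic rocket equation: rapidity gained = exhaust_beta * ln(m0 / m1),
    where rapidity = artanh(beta).
    """
    return math.exp(math.atanh(beta) / exhaust_beta)


V_EXHAUST = 0.1  # assumed, optimistic idealised fusion exhaust speed (fraction of c)
for beta in (0.1, 0.5, 0.9, 0.99):
    accel_only = mass_ratio(beta, V_EXHAUST)
    # Stopping at the destination needs the same rapidity change again,
    # so the overall mass ratio is squared.
    with_braking = accel_only ** 2
    fuel_fraction = 1.0 - 1.0 / with_braking
    print(f"{beta}c: mass ratio {accel_only:.3g} ({with_braking:.3g} incl. braking), "
          f"fuel = {fuel_fraction:.6%} of launch mass")
```

Under these assumptions, reaching just 0.5c needs a mass ratio of 243, and a ship that also has to brake at the far end would be more than 99.99% propellant at launch.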